When Frankenstein's creature, confronted with the pages of Paradise Lost, stops and asks himself “What was I?”, he not only formulates an intimate doubt but also opens a metaphysical crack that runs through the centuries and still touches us: the question of the soul. That fissure - barely a line in the fabric of the human - seems to widen today under the pressure of the new generative models, to the point of blurring the contours of what we thought possible and forcing us to reconsider where matter ends and consciousness begins.

Indicators of awareness

Machines that translate, paint, converse and compose music. Artifacts that mimic empathy and reason with apparent ease. Some researchers, relying on frameworks such as Giulio Tononi's Integrated Information Theory (IIT) or Bernard Baars's Global Workspace Theory - developed experimentally by Stanislas Dehaene - already speak of “indicators” of consciousness: global integration of information, broadcasting to multiple systems, metacognitive monitoring. Could the soul - that last ontological frontier - emerge as a simple epiphenomenon of complexity, as a sort of mist that appears when matter is organized with sufficient density? Can a machine come to claim its own?

It should be added, however, that the specialized literature itself recognizes that, although theories such as the Integrated Information Theory or the Global Workspace Theory have empirical support in biological brains, their application to artificial systems remains largely exploratory and does not constitute a reliable test of consciousness today.

Technical barriers and limits

Some sectors of the scientific community propose evaluating machine consciousness on the basis of these “indicator properties”: recurrent processing, global broadcasting of information, monitoring of the system's own states, agency and even some form of embodiment. The assessment, for the moment, is sober: no AI meets these conditions in a robust way, although in principle there are no technical barriers preventing future systems from implementing them.

Let us imagine, however, that in the near future an artificial system could satisfy all these criteria. It could integrate information globally, monitor its own internal states, adjust its behavior according to projected goals, develop a coherent narrative of itself over time. Suppose it could even speak in the first person with impeccable consistency, describe its “experiences” and defend its identity with refined arguments. Would we then have reached the ontological threshold?

Function vs. being

All of the above would, strictly speaking, answer a functional question: how a system operates, what processes it executes, what architecture sustains its behavior. But the decisive question is not only how something works, but what it is. Indicators describe activities, but they do not reach the foundation of the subject that performs them: multiplying functions is not the same as constituting a subject. A system can simulate the discourse of interiority, but that does not imply that there is someone for whom something is given as experience. The problem is no longer one of degree of complexity, but of order of reality.

The hard problem

It is here that what the Australian philosopher David Chalmers called in the 1990s the “hard problem” of consciousness appears: it is not enough to explain how information is integrated or how attention is regulated; it remains to be clarified why this integration is accompanied by experience, why there is something that is felt. This qualitative leap cannot be translated into computation.

Now, although the “hard problem” enjoys wide recognition in the philosophy of mind, its status as a definitive limit on any naturalistic explanation of consciousness continues to be debated, and there is no consensus on the matter. It is precisely at this limit - whether interpreted as an insurmountable obstacle or as a challenge still open - that the classical philosophical tradition, taken up and developed by Christian thought, finds its own place for the notion of the soul.

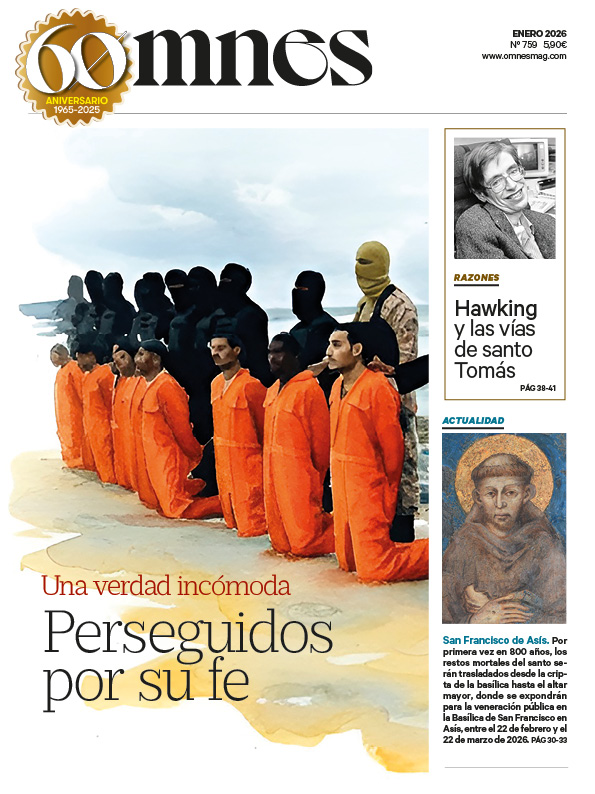

The soul in the Christian tradition

If not even phenomenal consciousness - that elementary fact that there is “something that is felt” - can be reduced without remainder to functional complexity, how can we expect technology to explain what, in Christian anthropology, is much more radical: the soul? In the Thomistic framework, self-consciousness is not the soul, but only one of its powers, a reflection of spiritual interiority. The soul is the ontological principle that sustains this experience and transcends it infinitely. To reduce it to functional consciousness would be to confuse the brightness of the reflection with the source of the light.

For the Christian faith, the rational soul does not spring from matter or from any technical assembly: it is created immediately by God, it is immortal, and it is united to the human body as its substantial form - neither by juxtaposition nor by undifferentiated fusion. Therein rests the irreducible dignity of each person, image of God and destined for eternity. That is another story altogether.

Narrative or ontology

The temptation, however, is strong: to reconfigure “soul” as a psychological or narrative metaphor. A loop of identity that persists over time like a recognizable melody. And yes, the image is beautiful. But it solves nothing. Narrative is not ontology.

The basic question is not whether something can be told as a self, but whether there is in that something a subject that is, in a strong sense, the real bearer of that story. And here the discussion ceases to be literary or psychological and inevitably enters the realm of metaphysics.

Ethical and metaphysical challenges

And yet, the speculative imagination does not stop. Philosophers such as Thomas Metzinger have even raised the question of whether conscious artificial systems would merit moral consideration, while thinkers such as Nick Bostrom speculate on scenarios in which non-biological intelligences surpass our capacities and pose unprecedented ethical challenges. There is talk of “synthetic souls,” of emergent subjectivities in non-biological entities. We fantasize about an ethics for machines, about rights that would recognize their dignity. In parallel, the academic debate on the possible moral status of potentially conscious artificial systems has grown remarkably in recent years, to the point of consolidating itself as a field of its own within applied ethics and the philosophy of technology.

Others reduce the question to minimum conditions of interiority: sensors, internal states, the capacity to project futures and assign value to them. But the old Aristotelian-Thomistic philosophy issues its warning: it is not enough to assemble functions. Without substantial unity there is no subject, only gears.

The mirror of the machine

Artificial intelligence does not remind us that machines are about to have souls. It reminds us, perhaps, that we have not yet fully understood what it means to have one. Just as Darwin in the 19th century forced us to rethink the relationship between faith and evolution, today AI acts as a catalyst: it forces us to clarify what it means to be the image of God, and to distinguish between the appearance of intelligence and the reality of the person.