Artificial Intelligence (AI) is becoming a reality that permeates more and more aspects of our lives. In my work as a school chaplain, I have had the opportunity to reflect on this fascinating crossroads between technology and morality. When girls first began coming to confession repenting of having "copied" schoolwork using AI, I realized it was time to understand it better.

The Vatican document Antiqua et Nova, issued in January jointly by the Dicastery for the Doctrine of the Faith and the Dicastery for Culture and Education, can shed light on this question.

When AI enters the intimate sphere

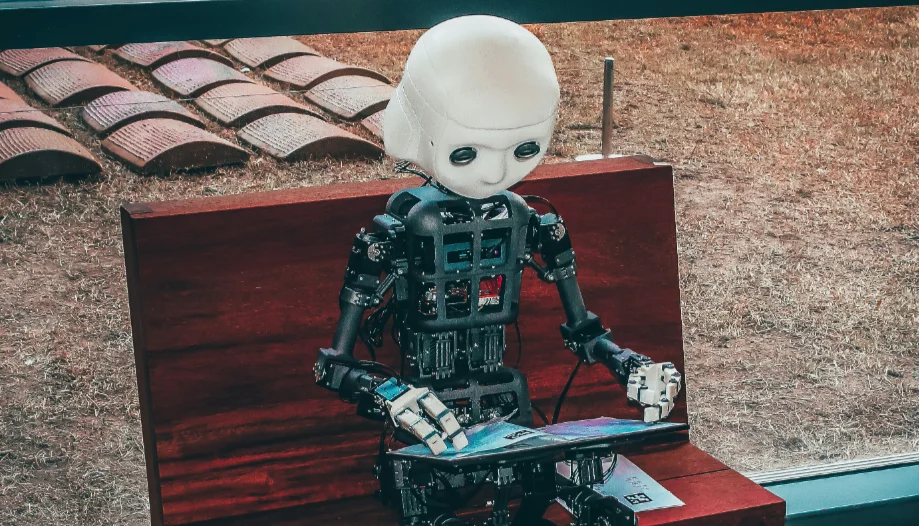

Until now we have associated AI with efficiency, task automation and processing large volumes of data. And certainly, AI remains an invaluable tool for personal and professional productivity, helping us organize our lives, manage schedules or even generate code. However, what the most recent studies reveal is a surprising shift towards much more emotional and personal uses of AI.

Today, one of the main uses of AI is no longer merely technical or productivity-related; it has extended into spheres such as therapy and companionship. People turn to AI to seek emotional support, to have a "listening ear," or even to converse with simulations of deceased loved ones. Another prominent use is the search for purpose and self-development, with people consulting AI for guidance on values, goal setting or philosophical reflection, even engaging in "Socratic dialogues" with these tools.

Digital Companion

This phenomenon deeply challenges us. AI has become a kind of "digital companion" or "thought partner", capable of personalizing responses and adapting to our emotional states. Users are no longer just passive consumers, but "co-creators" who refine their interactions for more nuanced responses.

This is where, as Antiqua et Nova warns us, we must be especially vigilant not to lose sight of our own humanity. The fact that AI can simulate empathetic responses, offer companionship or even "help" in the search for purpose does not mean that it possesses true empathy or that it can bestow meaning on life.

Artificial intelligence, however advanced, cannot match human intelligence, which is also shaped by bodily experiences, sensory stimuli, emotional responses and authentic social interactions. AI operates on computational logic and quantitative data; it does not feel, it does not love, it does not suffer, it has no consciousness and no free will. Therefore, it cannot replicate moral discernment or the ability to establish authentic relationships.

Why is it crucial to understand this?

Empathy is intrinsically human: True empathy arises from the ability to share the other's feelings, to understand their pain or joy from our own embodied experience. AI can process a wealth of data about human emotions and generate responses that look empathetic, but it does not feel or experience those emotions. It is a simulation, not a reality. Relying on AI for empathy is like expecting a map to give you the experience of walking a path.

The meaning of life is born of relationship and transcendence: meaning, purpose in life and fulfillment are not found in an algorithm or in a machine-generated response. They are born from our authentic relationships with God and with others, from our capacity to love and be loved, from our sacrifice, from the experience of shared pain and joy, and from self-giving to an ideal that transcends us. As a priest, I see every day how true fulfillment is found in self-giving and in the encounter with the other, something that AI, by definition, cannot offer. It is in interpersonal relationships, often imperfect and challenging, that we are forged and find deep meaning.

Risks of emotional and spiritual dependence: If we begin to delegate to AI our need for companionship, emotional support or even our search for meaning, we run the risk of developing a dependence that leads us away from genuine sources of fulfillment. We may settle for a "pseudo-companionship" that will never challenge us to grow in virtue, to forgive, to love unconditionally or to transcend our own limits.

The risks of anthropomorphization and the richness of human relationships

The tendency to anthropomorphize AI blurs the line between the human and the artificial. The use of chatbots, for example, can shape our idea of human relationships in a utilitarian way.

The risks are clear:

- Dehumanization of relationships: If we expect the same perfection and efficiency from people as from a chatbot, we risk eroding the patience, listening and vulnerability that define authentic relationships.

- Reduction of the human being: Seeing AI as "almost human" can lead us to see the human being as a simple algorithm, ignoring our freedom, soul and capacity to love.

- Impoverishment of the teacher's role: Treating AI as a substitute for the teacher forgets that the teacher's mission is much more than imparting data; it is to form judgment, inspire and accompany personal and moral growth.

- Delegation of moral discernment: We may be tempted to hand over to AI ethical decisions that belong to us alone.

How to deal with them?

- Critical awareness: Educate about what AI is and what it is not, demystifying its capabilities.

- Revalue the human: Promote spaces for genuine interaction, where the richness of the imperfection and complexity of human relationships can be appreciated.

- Dignify the educator: Emphasize his or her irreplaceable role in the formation of persons.

- Educate for freedom and responsibility: Insist that moral decision-making is our prerogative. AI is a tool; ethical choice is ours.

An Ongoing Dialogue: Where Do We Leave the Soul?

The sudden arrival of Artificial Intelligence invites us to an unavoidable existential dialogue, beyond technological fascination or mere efficiency. If AI can simulate a digital "embrace" or act as a philosophical "guide", where, then, lies the irreplaceable depth of human relationship, of the empathy born of flesh and spirit, and of the transcendence that only the human soul can yearn for and attain?

The real challenge is not merely technical, but anthropological and spiritual: to discern with radical honesty whether we are unconsciously delegating to an algorithm what only the encounter with others and with God can fulfill, risking impoverishing our own humanity in the pursuit of a digital comfort that can never fill the emptiness of the heart.